UK becoming a hub for AI cybersecurity

Analysis of research results from the Government’s AI and Software cybersecurity market analysis report indicates that 48% of AI cybersecurity providers are headquartered in the United Kingdom, followed by the United States (38%), Ireland (6%), Israel (3%), Romania (2%), India (2%) and France (2%). The research by OnSecurity reveals that among software security providers, the United Kingdom continues to see […]

IN FOCUS: Commercial physical security trends for 2025

With cyber security being such a hot-button topic, and 2024 seeing major cyber incidents affecting organisations like the NHS and CrowdStrike that caused significant disruption, it’s easy to forget that physical security for businesses and commercial properties is just as important and needs just as much attention. To bring focus to the future of this area in the security industry, the […]

PRODUCT SPOTLIGHT: Ontinue ION

Ontinue ION is the managed extended detection and response service of choice for Microsoft security customers. ION leverages an AI-powered platform, human expertise and our customers’ own Microsoft tools to deliver tailored protection that conforms to your environment and operations. The result is fast threat detection and response, and continuous security posture hardening. With ION handling […]

Plugging security gaps with AI without creating additional risk

AI has the potential to transform every aspect of business, from security to productivity. Yet companies’ headlong, unmanaged rush to exploit innovation is creating unknown and poorly understood risks that require urgent oversight, argues Mark Grindey, CEO, Zeus Cloud… Business Potential: Generative AI (Gen AI) tools are fast becoming a core component of any business’s strategy – […]

Employers not prepared for AI threats to data security

The majority of businesses acknowledge AI’s potential risks, yet only a small portion have established comprehensive controls surrounding its use. This is according to new findings from Sapio Research examining both consumer and employer attitudes towards AI across Europe. The report surveyed 800 consumers and 375 business decision-makers responsible for their organisation’s finance department, with respondents from […]

Shortage of high-quality data ‘threatens AI boom’

An Open Data Institute (ODI) white paper has identified what it says are significant weaknesses in the UK’s tech infrastructure that threaten the predicted potential gains – for people, society, and the economy – from the AI boom. It also outlines the ODI’s recommendations for creating diverse, fair data-centric AI. Based on its research, the ODI […]

How Artificial Intelligence is enhancing physical security in the UK’s commercial and public sectors

Security threats are growing in complexity, demanding advanced solutions. Artificial Intelligence (AI) is increasingly playing a pivotal role in reshaping the security landscape within the UK’s commercial and public sectors. By integrating AI, organisations can bolster their physical security measures to unprecedented levels. Let’s delve into the specific ways AI is being leveraged… Intelligent Video […]

Study shows generative AI now an ‘emerging risk’ for enterprise

The mass availability of generative AI, such as OpenAI’s ChatGPT and Google Bard, became a top concern for enterprise risk executives in the second quarter of 2023. “Generative AI was the second most-frequently named risk in our second quarter survey, appearing in the top 10 for the first time,” said Ran Xu, director, research in […]

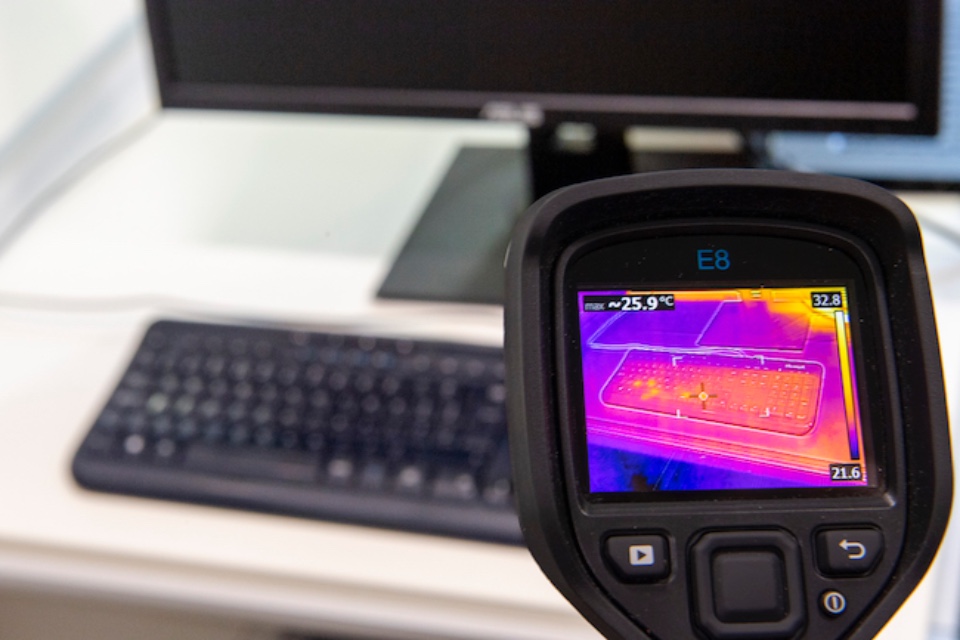

University of Glasgow tackles thermal attack personal data threat using AI

A team of computer security experts at the University of Glasgow have developed a set of recommendations to help defend against ‘thermal attacks’ which can steal personal information. Thermal attacks use heat-sensitive cameras to read the traces of fingerprints left on surfaces like smartphone screens, computer keyboards and PIN pads. Hackers can use the relative […]

Could AI-generated ‘synthetic data’ be about to take off in the security space?

Synthetic data startups are spearheading a revolution in artificial intelligence (AI) by redefining the landscape of data generation, with implications for myriad industries, including security. That’s according to GlobalData, which says that, with substantial venture capital investment and a clear sense of direction, these startups are transforming industries, overcoming data limitations, and propelling AI innovation […]